Assume there are many columns in a data frame that are of string type but always have a value of “N” or “Y”. You would like to scan a column to determine if this is true and if it is really just Y or N, then you might want to change the column type to boolean and have false/true as the values of the cells.

What the Below Code does:

1. Collects the Column Names and Column Types in a Python List

2. Iterate over a for loop and collect the distinct value of the columns in a two dimensional array

3. In the Loop, check if the Column type is string and values are either ‘N’ or ‘Y’

4. If Yes ,Convert them to Boolean and Print the value as true/false Else Keep the Same type.

PySpark Code:

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| df = sc.parallelize([(1, 'Y','F',"Giri",'Y'), (2, 'N','V',"Databricks",'N'),(3,'Y','B',"SparkEdge",'Y'),(4,'N','X',"Spark",'N')]).toDF(["id", "flag1","flag2","name","flag3"]) | |

| print 'Show Dataframe' | |

| df.show() | |

| print 'Actual Schema of the df' | |

| df.printSchema() | |

| for a_dftype in df.dtypes: | |

| col_name = a_dftype[0] | |

| col_type = a_dftype[1] | |

| # print df.select(col_name).collect()[0][0] | |

| if col_type=='string' and (df.select(col_name).distinct().collect()[0][0] =='N' or df.select(col_name).distinct().collect()[0][0] =='Y'): | |

| df = df.withColumn(col_name,df[col_name].cast("boolean")).drop(df[col_name]) | |

| else: | |

| df = df.withColumn(col_name,df[col_name]).drop(df[col_name]) | |

| print 'df with True/False Value after Data Type changes' | |

| df.show() | |

| print 'Modified Schema of the df' | |

| df.printSchema() |

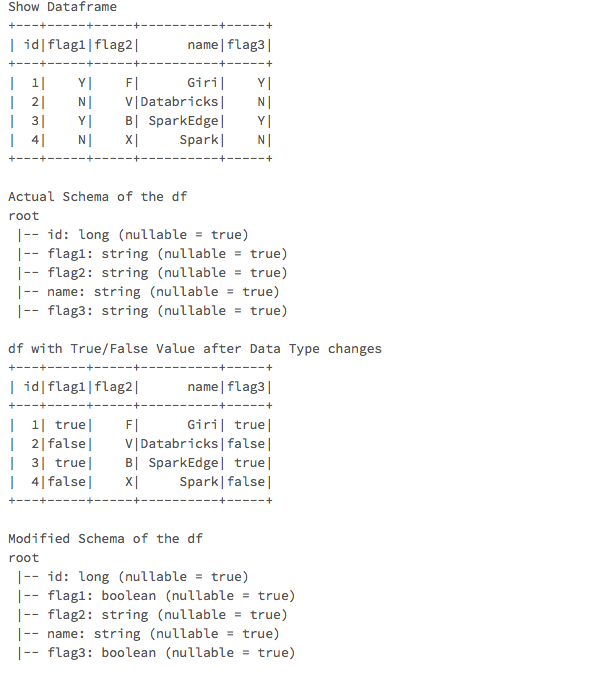

See the Output:

I have attached the snapshot of the results. Please look at the columns flag1,flag3 converted to true or false and rest of the columns are printed with the original value.

Is there an alternative for this in scala

LikeLiked by 1 person

You can use pivot function in Spark. https://databricks.com/blog/2016/02/09/reshaping-data-with-pivot-in-apache-spark.html

LikeLike

or do a custom code to loop through all columns.

LikeLike

Thank a lot. I was able to achieve the results.

LikeLiked by 1 person